Multi AI-Agent systems represent a significant advancement in software development, characterized by two fundamental aspects:

- Collaborative Intelligence: Multiple AI agents working together to achieve shared objectives, each contributing their specialized capabilities.

- Dynamic Decision Making: Systems that employ AI-driven tool calling to determine execution paths based on real-time context, rather than following predetermined logic flows.

The dynamic nature of these systems presents a unique challenge: understanding the flow of logic becomes increasingly complex as the system scales. A single query might trigger no tool calls, while another could initiate a cascade of nested interactions where AI agents themselves trigger additional tool calls. This complexity makes system behavior difficult to track and debug.

To address this challenge, I'm introducing AgentGraph, an open-source library that integrates with Python or Node.js backends. It captures LLM interactions and tool calls in real time and presents them as an interactive graph, giving clear visibility into system behavior.

Visualizing Agent Interactions

Let's examine a practical example: a database query agent with access to two tools:

- SQLAgent: Converts natural language queries into SQL

- DatabaseAgent: Executes SQL queries and returns results

Here's how the agent graph visualizes different types of interactions:

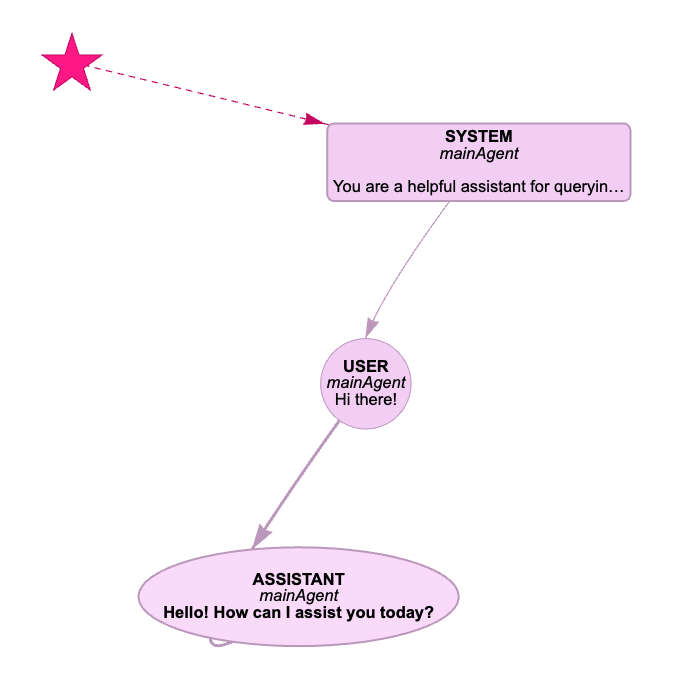

Query 1: "Hi there!"

A simple greeting that doesn't require database access results in no tool calls:

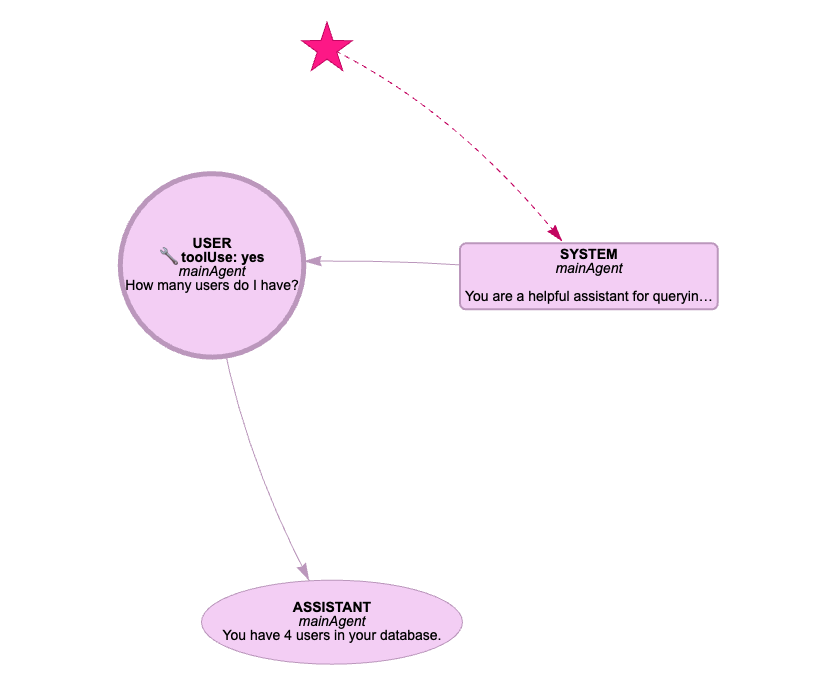

Query 2: "How many users do I have?"

This query triggers a sequence of tool calls:

- SQLAgent converts the query to SQL

- DatabaseAgent executes the query

The main agent's graph shows:

The second bubble contains the user input (also indicating that the LLM used tools), followed by the final response bubble. Clicking the second bubble reveals the tool calls:

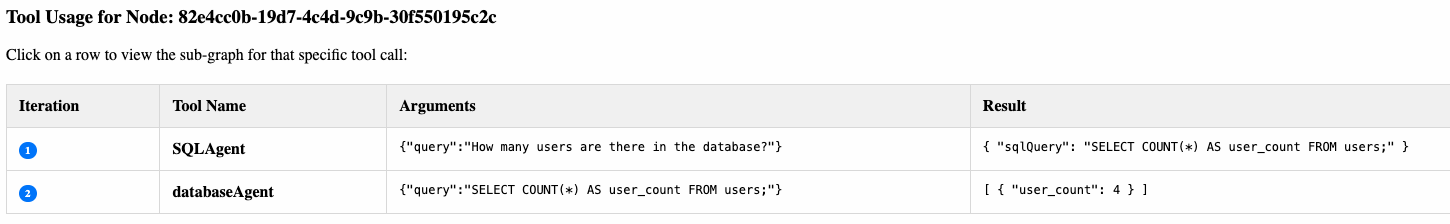

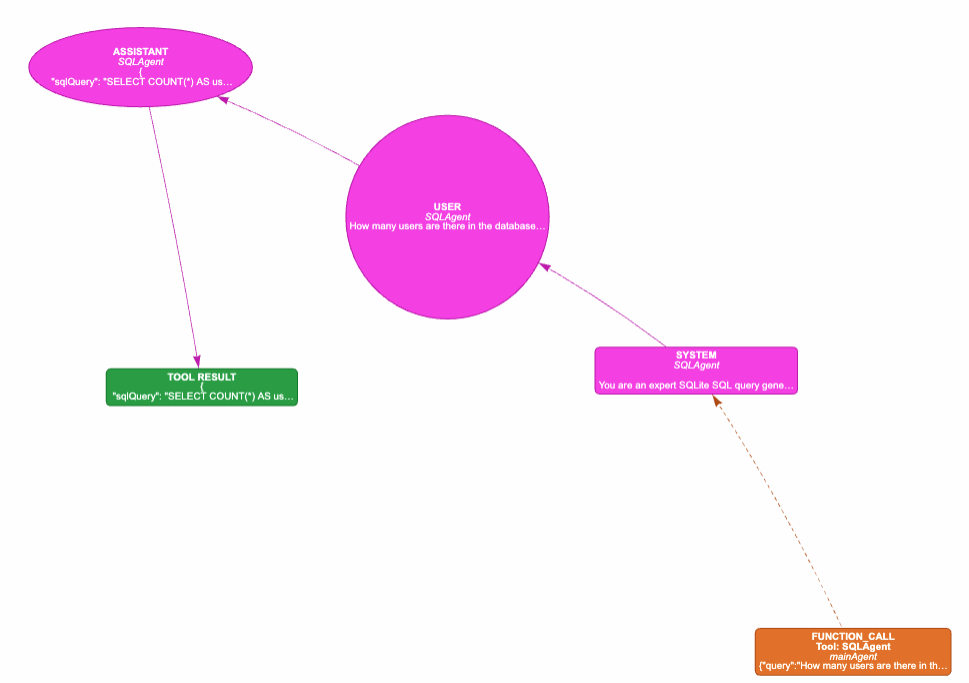

The LLM first calls SQLAgent, then DatabaseAgent. Clicking on the SQLAgent row shows its subgraph:

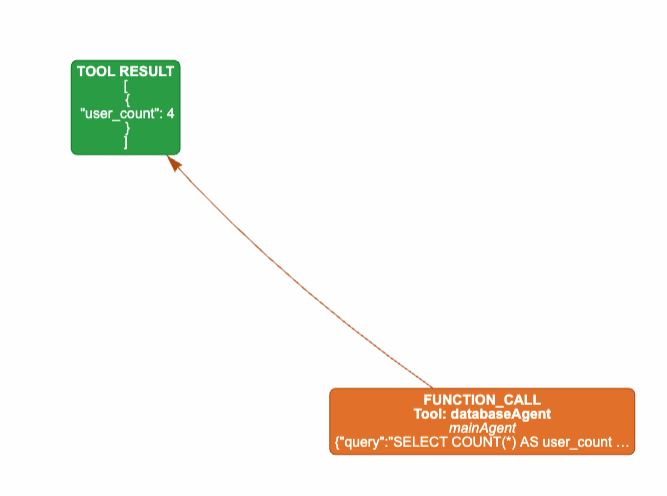

The subgraph displays the tool input (orange box), output (green box), and complete LLM chat history. While this example shows a simple tool, the visualization scales to handle complex nested tool calls. The DatabaseAgent's subgraph shows:

Since this tool doesn't use an LLM, it simply displays the input and output.

Integration Guide

Let's walk through implementing AgentGraph in a Node.js backend. You can find the complete example code here. While we'll focus on Node.js, the implementation is similar for Python backends.

To get started, install the AgentGraph package:

```bash
npm install @trythisapp/agentgraph
```

Setting Up the Main Agent

The core of the integration involves wrapping your LLM calls with AgentGraph's callLLMWithToolHandling function. Here's how to set up the main agent:

```typescript
import OpenAI from "openai";
import { callLLMWithToolHandling, clearSessionId } from "@trythisapp/agentgraph";
import { v4 as uuidv4 } from 'uuid';

// The client reads OPENAI_API_KEY from the environment by default
const openaiClient = new OpenAI();

async function mainAgent(userInput: string, sessionId: string) {
  let input: OpenAI.Responses.ResponseInput = [{
    role: "system",
    content: `You are a helpful assistant for querying a database...`
  }, {
    role: "user",
    content: userInput
  }];

  try {
    let response = await callLLMWithToolHandling(
      "mainAgent",     // Agent name for visualization
      sessionId,       // Unique session identifier
      undefined,       // Tool ID (undefined for main agent)
      async (inp) => { // LLM call function
        return await openaiClient.responses.create({
          model: "gpt-4.1",
          input: inp,
          tools: [/* tool definitions */],
        });
      },
      input,           // Input messages
      [/* tool implementations */]
    );

    console.log(response.output_text);
  } finally {
    clearSessionId(sessionId); // Clean up session data
  }
}
```

Session Management

Each interaction requires a unique session ID to track the conversation flow:

```typescript
let sessionId = uuidv4();
console.log(`Session ID: ${sessionId}. File is saved in ./agentgraph_output/${sessionId}.json`);
```

The session ID serves two purposes:

- Visualization: Groups related interactions in the same graph

- File Output: Creates a JSON file with the complete interaction trace

Always call clearSessionId(sessionId) when the session ends to clean up memory.
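One way to make that cleanup hard to forget is to wrap session creation and release in a single helper. The sketch below is only an illustration: `withSession`, `activeSessions`, and `releaseSession` are hypothetical names, the in-memory set stands in for whatever state the library keeps per session, and `randomUUID` from Node's `crypto` module is used in place of `uuidv4()` so the block has no external dependencies. In a real integration, `releaseSession` is where `clearSessionId(sessionId)` would go.

```typescript
import { randomUUID } from "node:crypto";

// Hypothetical stand-in for per-session state held in memory.
const activeSessions = new Set<string>();

// Hypothetical cleanup hook; a real integration would call
// clearSessionId(sessionId) here.
function releaseSession(sessionId: string): void {
  activeSessions.delete(sessionId);
}

// Runs `work` with a fresh session ID and always releases the session
// afterwards, whether `work` resolves or throws.
async function withSession<T>(
  work: (sessionId: string) => Promise<T>
): Promise<T> {
  const sessionId = randomUUID();
  activeSessions.add(sessionId);
  try {
    return await work(sessionId);
  } finally {
    releaseSession(sessionId);
  }
}
```

With a helper like this, a request handler could call `withSession((sessionId) => mainAgent(userInput, sessionId))` and never touch the cleanup call directly.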

Defining Tools

Tools are defined using OpenAI's function calling format within the LLM call:

```typescript
tools: [{
  type: "function",
  strict: true,
  name: "SQLAgent",
  description: "Use this tool when you need to convert the natural language query into a SQL query",
  parameters: {
    type: "object",
    properties: {
      query: {
        type: "string",
        description: "The natural language query to convert into a SQL query"
      }
    },
    required: ["query"],
    additionalProperties: false
  }
}, {
  type: "function",
  strict: true,
  name: "databaseAgent",
  description: "Use this tool when you need to execute the SQL query and return the results",
  parameters: {
    type: "object",
    properties: {
      query: {
        type: "string",
        description: "The SQL query to execute"
      }
    },
    required: ["query"],
    additionalProperties: false
  }
}]
```

Implementing Tool Handlers

The final parameter of callLLMWithToolHandling is an array of tool implementations:

```typescript
[{
  toolName: "SQLAgent",
  impl: async ({ query }: { query: string }, toolId: string) => {
    return runSQLAgent(sessionId, toolId, query);
  }
}, {
  toolName: "databaseAgent",
  impl: async ({ query }: { query: string }) => {
    return runDatabaseAgent(query);
  }
}]
```

Each tool implementation receives:

- Parameters: The arguments passed by the LLM (destructured from the first parameter)

- Tool ID: A unique identifier for this specific tool call (used for nested visualization)
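To make the handler contract concrete, here is a rough sketch of how a dispatcher could route a tool call to the matching implementation. This is not AgentGraph's internal code: `ToolCall`, `dispatchToolCall`, and the idea of reusing the call's ID as the tool ID are assumptions made for illustration. Only the `{ toolName, impl }` shape comes from the handlers above.

```typescript
// Mirrors the { toolName, impl } entries shown above. `any` is used for
// the arguments because each tool declares its own parameter shape.
type ToolImpl = {
  toolName: string;
  impl: (args: any, toolId: string) => Promise<string>;
};

// Hypothetical shape of a tool call emitted by the model.
type ToolCall = { name: string; callId: string; argumentsJson: string };

// Finds the implementation by name, parses the JSON arguments, and passes
// the call ID through as the tool ID (an assumption for this sketch).
async function dispatchToolCall(
  call: ToolCall,
  impls: ToolImpl[]
): Promise<string> {
  const entry = impls.find((t) => t.toolName === call.name);
  if (!entry) {
    throw new Error(`No implementation registered for tool "${call.name}"`);
  }
  const args = JSON.parse(call.argumentsJson);
  return entry.impl(args, call.callId);
}
```

The lookup is by exact name, so the `name` in each tool definition and the `toolName` in its handler need to match character for character, including case.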

Creating Nested Agents

For tools that use LLMs internally (like SQLAgent), you create nested agents by calling callLLMWithToolHandling again:

```typescript
async function runSQLAgent(sessionId: string, toolId: string, query: string): Promise<string> {
  let input: OpenAI.Responses.ResponseInput = [{
    role: "system",
    content: `You are an expert SQLite SQL query generator...`
  }, {
    role: "user",
    content: query
  }];

  let response = await callLLMWithToolHandling(
    "SQLAgent", // Nested agent name
    sessionId,  // Same session ID
    toolId,     // Tool ID from parent call
    async (inp) => {
      return await openaiClient.responses.create({
        model: "gpt-4.1",
        input: inp,
        text: {
          format: {
            type: "json_schema",
            name: "sqlQuery",
            schema: {/* JSON schema */}
          }
        }
      });
    },
    input,
    [] // No tools for this agent
  );

  return response.output_text;
}
```

Notice how the nested agent:

- Uses the same sessionId to maintain session continuity

- Receives the toolId from the parent to establish the parent-child relationship

- Can have its own tools or none at all
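To see why passing the tool ID down is enough to rebuild the hierarchy, consider this toy trace recorder. It is a sketch under my own assumptions, not the library's data model: `SessionTrace`, `AgentNode`, `recordToolCall`, and `outline` are hypothetical names. The idea is that each tool ID remembers which agent issued it, so a nested agent started with that ID can be attached under the right parent.

```typescript
// One node per agent run; children are agents started from its tool calls.
type AgentNode = { agentName: string; children: AgentNode[] };

class SessionTrace {
  readonly roots: AgentNode[] = [];
  // Maps a tool ID to the agent that issued that tool call.
  private readonly callers = new Map<string, AgentNode>();

  // Starts an agent. Without a parent tool ID it becomes a root;
  // otherwise it is attached under the agent that issued the tool call.
  startAgent(agentName: string, parentToolId?: string): AgentNode {
    const node: AgentNode = { agentName, children: [] };
    if (parentToolId === undefined) {
      this.roots.push(node);
      return node;
    }
    const parent = this.callers.get(parentToolId);
    if (!parent) throw new Error(`Unknown tool ID: ${parentToolId}`);
    parent.children.push(node);
    return node;
  }

  // Remembers that `caller` issued the tool call identified by `toolId`.
  recordToolCall(caller: AgentNode, toolId: string): void {
    this.callers.set(toolId, caller);
  }
}

// Renders the tree as indented lines, two spaces per nesting level.
function outline(nodes: AgentNode[], depth = 0): string[] {
  const lines: string[] = [];
  for (const node of nodes) {
    lines.push(`${"  ".repeat(depth)}${node.agentName}`);
    lines.push(...outline(node.children, depth + 1));
  }
  return lines;
}
```

In this sketch, starting "mainAgent" with no tool ID, recording a tool call under it, and then starting "SQLAgent" with that same ID yields an outline with "SQLAgent" indented beneath "mainAgent".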

Simple Tool Implementation

For tools that don't use LLMs (like databaseAgent), the implementation is straightforward:

```typescript
async function runDatabaseAgent(query: string): Promise<string> {
  return queryDatabase(query); // Direct function call
}
```

Viewing the Results

After running your agent, AgentGraph seamlessly handles the complete interaction flow:

- Automatic Tool Orchestration: When the LLM decides to invoke a tool, callLLMWithToolHandling automatically executes the corresponding tool implementation and feeds the result back to the LLM for further processing.

- Complete Trace Capture: Every interaction, tool call, and response is captured and saved to ./agentgraph_output/${sessionId}.json, creating a comprehensive execution trace.

- Interactive Visualization: The generated JSON file can be visualized using the AgentGraph visualizer, which renders your agent interactions as an interactive graph.
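The orchestration step is easier to reason about with a stripped-down version of the loop in front of you. The following sketch is my own simplification, not the library's implementation: `runToolLoop`, `ModelTurn`, `Model`, and `Tools` are hypothetical, and the model is a plain function so the example runs without an API key. It shows the general pattern of calling the model, running any requested tool, appending the result to the history, and repeating until the model produces a final answer.

```typescript
type Message = { role: "user" | "tool"; content: string };

// A model turn is either a final answer or a request to run one tool.
type ModelTurn =
  | { kind: "final"; text: string }
  | { kind: "tool_call"; toolName: string; args: Record<string, string> };

type Model = (history: Message[]) => Promise<ModelTurn>;
type Tools = Record<string, (args: Record<string, string>) => Promise<string>>;

// Calls the model repeatedly, executing requested tools and feeding their
// results back, until a final answer arrives or maxSteps is exhausted.
async function runToolLoop(
  model: Model,
  tools: Tools,
  userInput: string,
  maxSteps = 8
): Promise<string> {
  const history: Message[] = [{ role: "user", content: userInput }];
  for (let step = 0; step < maxSteps; step++) {
    const turn = await model(history);
    if (turn.kind === "final") return turn.text;
    const tool = tools[turn.toolName];
    if (!tool) throw new Error(`Unknown tool: ${turn.toolName}`);
    const result = await tool(turn.args);
    history.push({ role: "tool", content: `${turn.toolName}: ${result}` });
  }
  throw new Error(`No final answer after ${maxSteps} steps`);
}
```

A greeting like "Hi there!" corresponds to the model returning a final turn immediately, so the loop exits without touching any tool. The step limit is a design choice in this sketch to keep a misbehaving model from looping forever.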

The visualization provides:

- Chat Flow: LLM interactions as conversation bubbles

- Tool Calls: Expandable sections showing tool inputs and outputs

- Nested Agents: Subgraphs for tools that use LLMs internally

- Execution Flow: Clear visual representation of the decision-making process

This integration approach provides complete visibility into your multi-agent system without requiring significant changes to your existing code structure.
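Before opening the visualizer, it can be handy to confirm that a trace file was actually written. The sketch below does only that. It deliberately makes no assumption about the trace schema: it reports whether the top-level JSON value is an array or an object and lists the keys. `describeTrace` and `describeTraceFile` are hypothetical helper names; the `./agentgraph_output/${sessionId}.json` path is the one mentioned above.

```typescript
import { existsSync, readFileSync } from "node:fs";
import { join } from "node:path";

// Summarizes the top-level shape of a JSON document without assuming
// anything about the trace format itself.
function describeTrace(jsonText: string): string {
  const parsed: unknown = JSON.parse(jsonText);
  if (Array.isArray(parsed)) return `array with ${parsed.length} entries`;
  if (parsed !== null && typeof parsed === "object") {
    return `object with keys: ${Object.keys(parsed).join(", ")}`;
  }
  return `${typeof parsed} value`;
}

// Looks for <outputDir>/<sessionId>.json and describes it if present.
function describeTraceFile(
  sessionId: string,
  outputDir = "./agentgraph_output"
): string {
  const filePath = join(outputDir, `${sessionId}.json`);
  if (!existsSync(filePath)) return `No trace file at ${filePath}`;
  return `${filePath}: ${describeTrace(readFileSync(filePath, "utf8"))}`;
}
```

Calling `describeTraceFile(sessionId)` right after an agent run gives a quick sanity check that the session produced output before you load it into the graph view.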

Takeaway

As AI agent systems become increasingly sophisticated, the ability to understand and debug their behavior becomes crucial for reliable deployment. AgentGraph addresses this challenge by providing real-time visualization of multi-agent interactions, making complex decision flows transparent and debuggable.

Key benefits of using AgentGraph include:

- Low-friction Integration: Minimal code changes required to instrument your existing agent systems

- Complete Visibility: Track every LLM interaction, tool call, and nested agent execution

- Interactive Debugging: Visual graph interface that lets you drill down into specific interactions

- Multi-language Support: Works seamlessly with both Python and Node.js backends

- Session Management: Automatic trace capture and file generation for analysis

Whether you're building simple tool-calling agents or complex multi-agent orchestration systems, AgentGraph provides the observability you need to understand, debug, and optimize your AI workflows.

Check out the AgentGraph repository and give it a ⭐ if you find it useful! Your support helps me continue developing tools that make AI development more transparent and accessible.